Shvil: Collaborative Augmented Reality Land Navigation

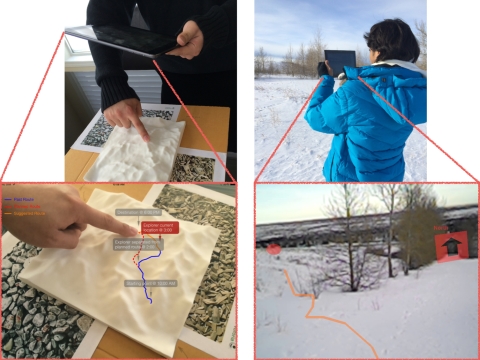

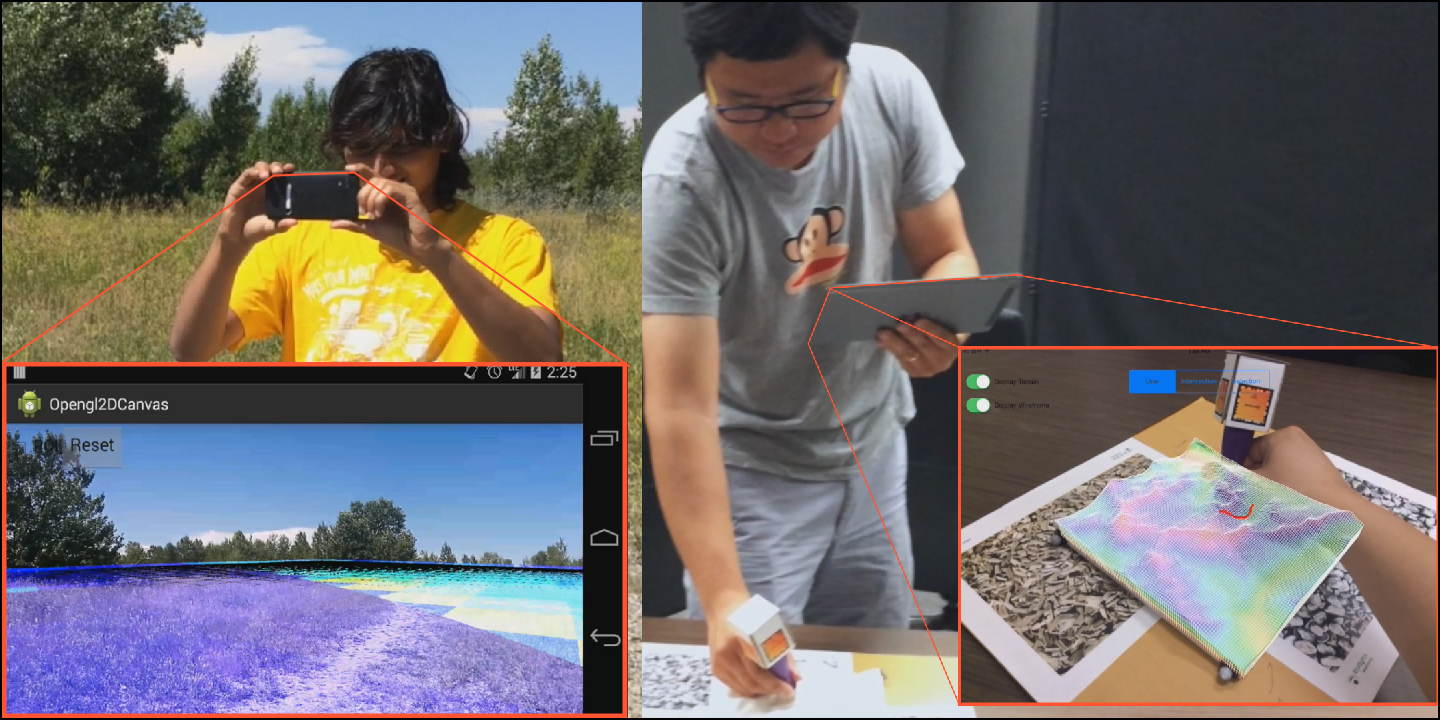

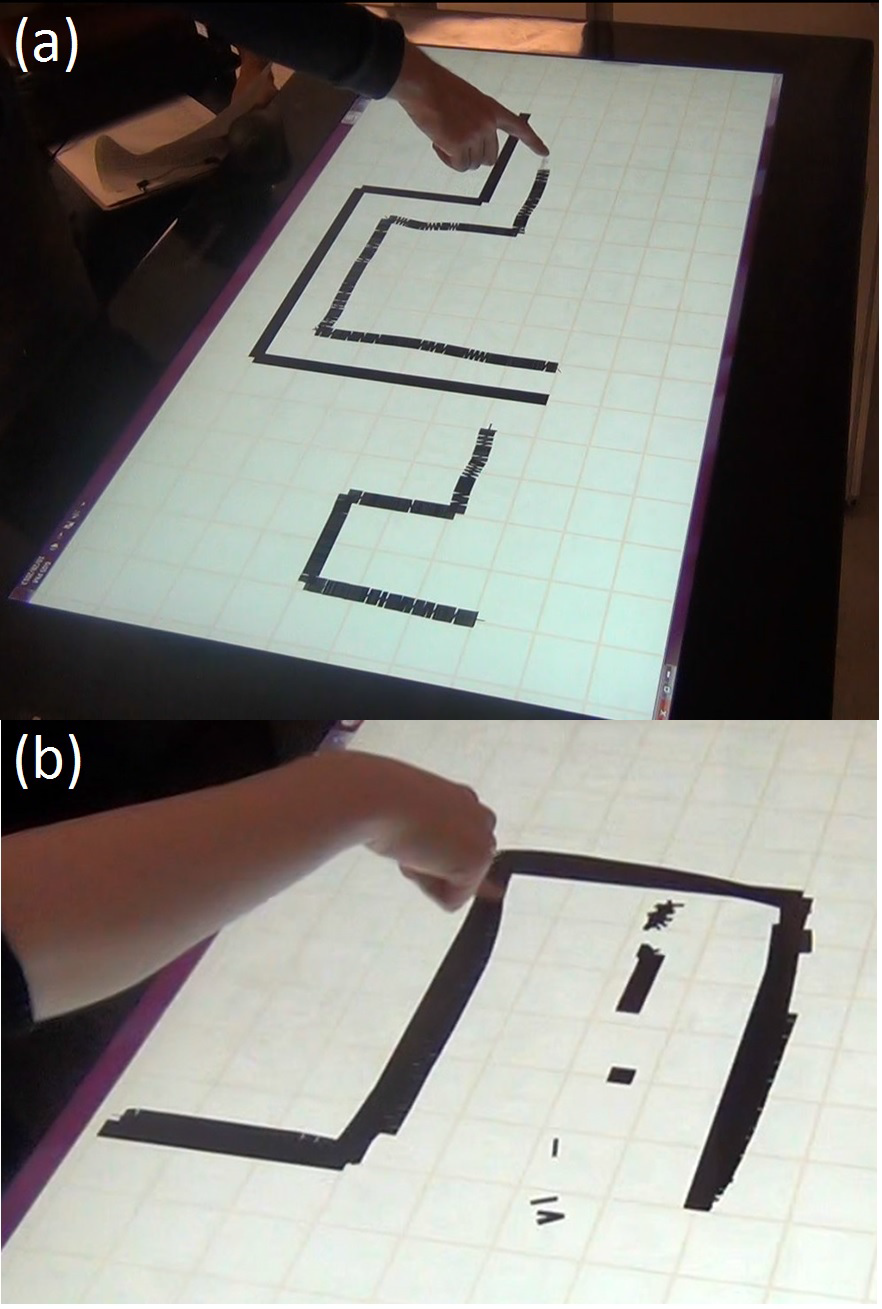

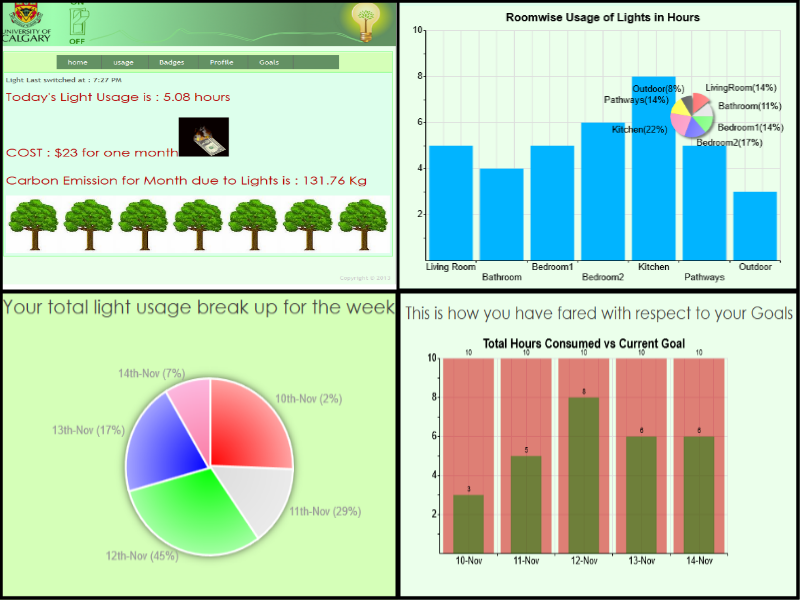

Abstract: We present our prototype of Shvil, an Augmented Reality (AR) system for collaborative land navigation. Shvil facilitates path planning and execution by creating a collaborative medium between an overseer (indoor user) and an explorer (outdoor user) using AR and 3D printing techniques. Shvil provides a remote overseer with a physical representation of the mission's topography via a 3D printout of the terrain, and merges the physical presence of the explorer with the actions of the overseer via dynamic AR visualization. The system supports collaboration in both directions: it overlays visual information about the explorer on the overseer's scaled-down physical model, and it overlays visual information from the overseer in situ for the explorer as that information emerges. We report our current prototype effort, preliminary results, and our vision for the future of Shvil.
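Overlaying the explorer on the printed terrain, and sending the overseer's actions back out to the field, both require mapping between real-world terrain coordinates and coordinates on the 3D printout. The abstract does not say how Shvil performs this registration. The following is a minimal sketch, assuming the terrain is expressed in a local metric frame (east, north, elevation in meters) and the print uses one uniform horizontal scale plus an optional vertical exaggeration. `TerrainPrint` and its fields are hypothetical names for illustration, not part of Shvil.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TerrainPrint:
    """Hypothetical description of a 3D-printed terrain model (assumed layout)."""
    origin_east_m: float          # world east coordinate mapped to the print's x = 0
    origin_north_m: float         # world north coordinate mapped to the print's y = 0
    base_elevation_m: float       # world elevation mapped to the print's z = 0
    horizontal_scale: float       # model millimeters per world meter
    vertical_exaggeration: float = 1.0  # extra factor applied to heights only

    def world_to_model(self, east_m: float, north_m: float, elev_m: float):
        """Map an explorer's world position to a point on the printed model (mm)."""
        x = (east_m - self.origin_east_m) * self.horizontal_scale
        y = (north_m - self.origin_north_m) * self.horizontal_scale
        z = (elev_m - self.base_elevation_m) * self.horizontal_scale * self.vertical_exaggeration
        return (x, y, z)

    def model_to_world(self, x_mm: float, y_mm: float, z_mm: float):
        """Map a point the overseer marks on the model back to world coordinates (m)."""
        east = self.origin_east_m + x_mm / self.horizontal_scale
        north = self.origin_north_m + y_mm / self.horizontal_scale
        elev = self.base_elevation_m + z_mm / (self.horizontal_scale * self.vertical_exaggeration)
        return (east, north, elev)


if __name__ == "__main__":
    # Assumed print: 0.1 mm per meter horizontally, heights exaggerated 2x.
    terrain = TerrainPrint(1000.0, 2000.0, 300.0, horizontal_scale=0.1, vertical_exaggeration=2.0)
    explorer_on_model = terrain.world_to_model(1500.0, 2250.0, 350.0)
    waypoint_in_world = terrain.model_to_world(*explorer_on_model)
    print(explorer_on_model, waypoint_in_world)
```

Exaggerating only the vertical axis is a common choice for terrain prints, since at small horizontal scales relief can become too shallow to read; because both directions use the same parameters, a waypoint placed on the model maps back to the same world point it would have been projected from.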
